The Dawn of a New AI Era: More Than Just Words

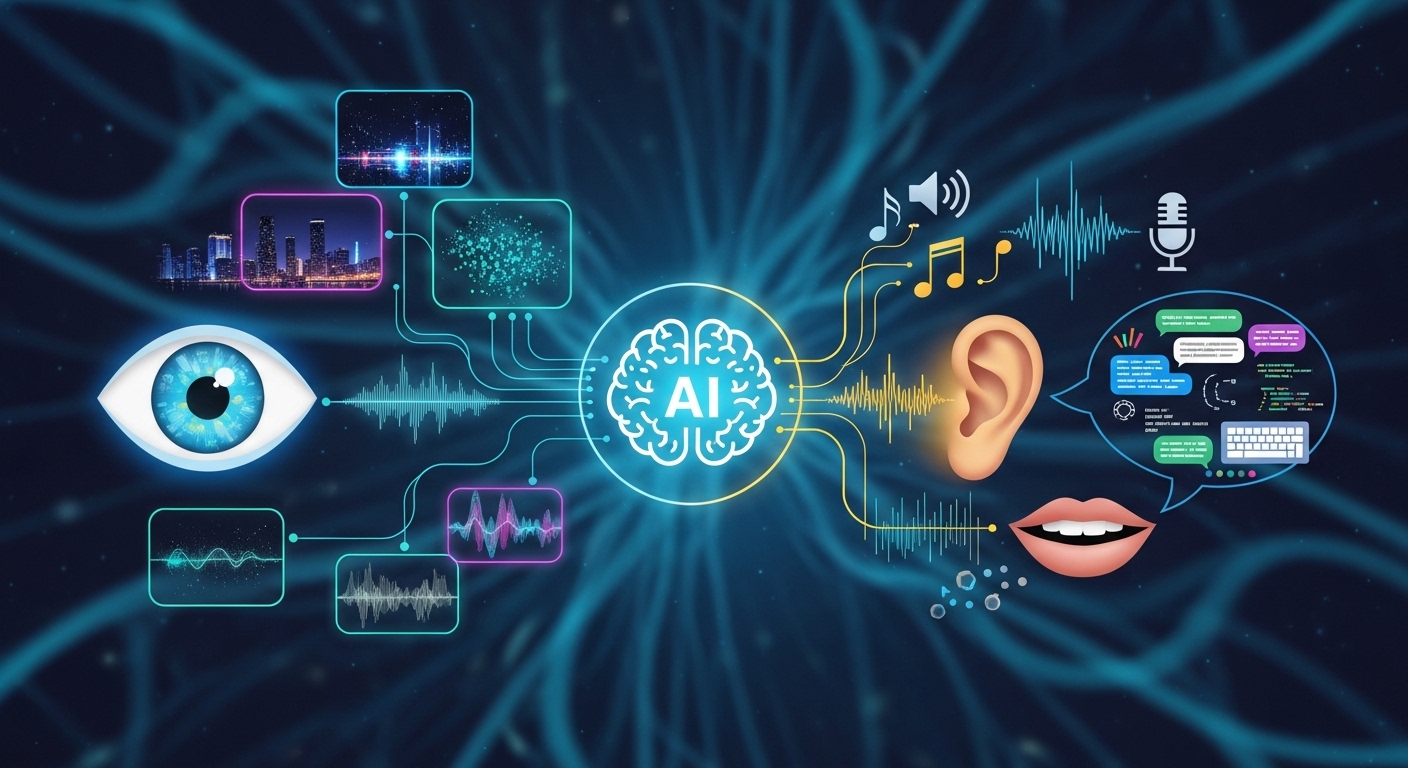

For the past few years, the world has been captivated by the power of large language models. We’ve watched in awe as ChatGPT and other generative AI tools have written poetry, drafted emails, and helped write complex software. But we interacted with them mainly through a single medium: text. We typed, and they typed back. That era is rapidly coming to a close. We are now standing at the threshold of the multimodal revolution, a seismic shift in which AI can see, hear, and speak, and it promises to change how we interact with technology at a fundamental level.

Imagine pointing your phone’s camera at a strange-looking plant in your garden and asking your AI assistant, “What is this, and is it safe for my dog?” and getting an instant, spoken answer. Picture collaborating on a design project where you sketch an idea on a napkin, and an AI instantly turns it into a digital wireframe while listening to your verbal instructions for changes. This isn’t science fiction; it’s the reality being built today by advancements in multimodal generative AI.

What Exactly Is Multimodal AI?

At its core, multimodal AI refers to artificial intelligence systems that can process, understand, and generate information across multiple types of data, or “modalities,” often within a single model. It breaks down the barriers between different forms of information, allowing AI to build a richer, more contextual understanding of the world, much like humans do.

Beyond Text: Understanding the Inputs

Humans naturally experience the world multimodally. We read text, see images, hear sounds, and speak to communicate. Our brains seamlessly integrate these streams of information to form a cohesive understanding. Traditional AI, however, was often specialized. An AI could be an expert at image recognition or a master of natural language processing, but rarely both. Multimodal AI bridges this gap. The key modalities include:

- Text: The foundation of traditional language models.

- Images: Still photos, diagrams, sketches, and even handwriting.

- Audio: Spoken language, music, and ambient sounds.

- Video: A combination of moving images and audio, offering dynamic context.

By training on vast datasets that pair these formats, such as images with captions or video with its soundtrack, models learn the intricate relationships between them. They learn that the word “dog” is associated not only with related words but also with countless images of dogs and the sound of a bark.
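One common way to learn these cross-modal links, popularized by contrastive image-text models such as OpenAI’s CLIP, is to map every modality into a shared embedding space, where a caption, a photo, and a sound about the same thing end up as nearby vectors. The Python sketch below shows only the lookup side of that idea, using hand-picked three-dimensional vectors that are purely illustrative (real embeddings are learned and far larger). The names `TEXT_EMBEDDINGS`, `closest_caption`, and the example vectors are hypothetical, and this is not a description of how GPT-4o or Gemini work internally.

```python
import math
from typing import Dict, Sequence

# Hypothetical, hand-picked 3-dimensional embeddings for text captions.
# A real model learns vectors with hundreds or thousands of dimensions.
TEXT_EMBEDDINGS: Dict[str, Sequence[float]] = {
    "a dog": [0.9, 0.1, 0.0],
    "a sunset": [0.0, 0.2, 0.9],
    "a guitar": [0.1, 0.9, 0.1],
}

# Hypothetical embeddings for non-text inputs, placed in the same shared space.
IMAGE_OF_DOG = [0.8, 0.2, 0.1]
SOUND_OF_BARK = [0.7, 0.0, 0.2]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_caption(
    embedding: Sequence[float],
    captions: Dict[str, Sequence[float]] = TEXT_EMBEDDINGS,
) -> str:
    """Return the caption whose embedding is most similar to the given input."""
    return max(captions, key=lambda c: cosine_similarity(embedding, captions[c]))


if __name__ == "__main__":
    print(closest_caption(IMAGE_OF_DOG))   # prints "a dog"
    print(closest_caption(SOUND_OF_BARK))  # prints "a dog"
```

The training step is the part not shown: a contrastive objective pulls each matching pair (say, a dog photo and the caption “a dog”) closer together and pushes mismatched pairs apart, which is what makes a simple nearest-neighbor lookup like this one meaningful.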

The Leap from Single-Modal to Multimodal

The evolution from single-modal to multimodal is a monumental leap. A single-modal text AI, like an earlier version of ChatGPT, could write a detailed description of a sunset. A multimodal AI can look at a photo of a specific sunset you took, analyze its colors and composition, and then write a poem about that very sunset, perhaps even setting it to a piece of generated music that matches its mood. This ability to ground understanding in multiple data types creates an experience that is not only more powerful but also significantly more intuitive and human-like.

The Game-Changers: Models Like GPT-4o

The recent unveiling of models like OpenAI’s GPT-4o (the ‘o’ stands for ‘omni’) and Google’s Gemini family represents a watershed moment for generative AI. These models were designed from the ground up to be natively multimodal, and the results are striking.

Real-Time Conversation and Vision

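Perhaps the most impressive feature of these new models is their speed and fluidity. Earlier attempts at multimodal interaction often felt clunky. ChatGPT’s original Voice Mode, for example, chained three separate models (one to transcribe your speech, one to write a text reply, and one to read it aloud), which added seconds of delay and lost cues like tone of voice along the way. Now, you can have a real-time spoken conversation with an AI that is also looking at what your camera sees. OpenAI reports that GPT-4o can respond to audio in as little as 232 milliseconds, close to human response times in conversation. You can interrupt it, ask follow-up questions, and get replies with natural-sounding intonation. This capability transforms the AI from a simple tool into a genuine collaborative partner.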

Lowering the Barrier to Entry

Another crucial aspect is accessibility. Many of these multimodal capabilities are being rolled out to the free tiers of popular AI platforms, often with usage limits. This democratization of advanced AI means that students, artists, small business owners, and everyday users can now use technology that was once the exclusive domain of major research labs. Such widespread access is likely to ignite a new wave of innovation as millions of people discover novel ways to use these tools.

Practical Applications of Multimodal Generative AI

The theoretical power of multimodal AI is impressive, but its true impact will be felt in its real-world applications across countless industries.

Revolutionizing Education

Imagine a personal tutor that can help a child with their math homework. The child can point their camera at a difficult problem, and the AI can not only see the problem but also listen to the child’s explanation of where they’re stuck. The AI can then provide hints and guidance through a natural, spoken conversation, rather than just giving away the answer.

Transforming Content Creation

The creative landscape is set for a massive overhaul. A marketing team could brainstorm an ad campaign by describing a scene, and the AI could generate a video storyboard with accompanying music and voice-over scripts. A musician could hum a melody, and the AI could orchestrate it into a full-fledged song. Multimodal generative AI tools, ChatGPT among them, are poised to act as powerful creative amplifiers.

Enhancing Accessibility

For individuals with disabilities, multimodal AI could be life-changing. A visually impaired person could use their phone to navigate a new environment, with the AI describing their surroundings in real time. It could read menus, identify products on a shelf, or describe the expressions on people’s faces during a conversation, fostering greater independence and connection.

The Challenges and Ethical Considerations

With great power comes great responsibility. The rise of multimodal AI also brings a new set of complex challenges and ethical dilemmas that we must navigate carefully.

The Potential for Misinformation

The same technology that can generate helpful videos can also be used to create highly realistic deepfakes and misinformation at an unprecedented scale. The ability to clone voices from just a few seconds of audio and sync them with a fabricated video presents a significant threat to trust and security.

Bias in Multimodal Data

AI models are trained on data from the real world, and that data contains human biases. A model trained on datasets where certain demographics are underrepresented may perform poorly for those groups. In a multimodal context, these biases can manifest in visual recognition, voice analysis, and other areas, potentially reinforcing harmful stereotypes.

Privacy Concerns

An AI that is always ‘on’—seeing through our cameras and listening through our microphones—raises profound privacy questions. How will this data be used? Who has access to it? Establishing robust privacy safeguards and transparent policies will be absolutely critical to building public trust.

Conclusion: Let’s Start the Conversation

We are at the very beginning of the multimodal AI journey. The shift from text-based interaction to a rich, seamless conversation involving sight, sound, and language may prove as significant as the move from command-line interfaces to graphical user interfaces. This new generation of generative AI, ChatGPT included, could unlock untold possibilities, making technology more intuitive, accessible, and powerful than ever before. While we must proceed with caution, addressing the ethical challenges head-on, the potential for positive transformation is immense. The future of AI is not just about writing and reading; it’s about understanding our world in all its rich, multifaceted glory. How do you think this multimodal revolution will change your industry or your daily life? The conversation is just beginning.